Automated Analysis and Quantification of Human Mobility Using a Depth Sensor Featured

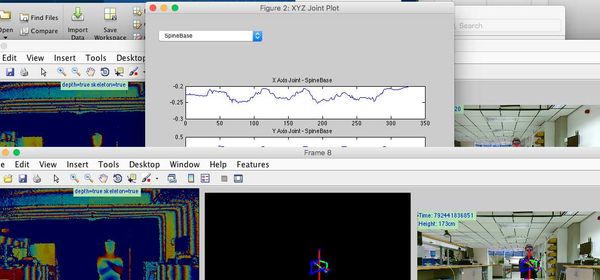

A while back I posted about the article Automated Analysis and Quantification of Human Mobility Using a Depth Sensor, which was submitted to the Journal of Biomedical and Health Informatics. The article was accepted and has recently gone to press. Even better, it has been selected as the featured article for its section, Sensor Informatics!

Here is the abstract:

Analysis and quantification of human motion to support clinicians in the decision-making process is the desired outcome for many clinical-based approaches. However, generating statistical models that are free from human interpretation and yet representative is a difficult task. In this paper, we propose a framework that automatically recognizes and evaluates human mobility impairments using the Microsoft Kinect One depth sensor. The framework is composed of two parts. First, it recognizes motions, such as sit-to-stand or walking 4 m, using abstract feature representation techniques and machine learning. Second, evaluation of the motion sequence in the temporal domain by comparing the test participant with a statistical mobility model, generated from tracking movements of healthy people. To complement the framework, we propose an automatic method to enable a fairer, unbiased approach to label motion capture data. Finally, we demonstrate the ability of the framework to recognize and provide clinically relevant feedback to highlight mobility concerns, hence providing a route toward stratified rehabilitation pathways and clinician-led interventions.
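To give a rough feel for the second part of the framework, here is a minimal sketch of comparing a test participant against a statistical model built from healthy participants. It is not the method from the paper: the per-frame mean and standard deviation model, the z-score threshold, the function names `build_mobility_model` and `flag_deviations`, and the assumption that every sequence is a single feature already time-aligned to the same number of frames are all my own simplifications for illustration.

```python
from statistics import mean, stdev


def build_mobility_model(healthy_sequences):
    """Build a per-frame (mean, standard deviation) model.

    Assumption: each sequence holds one feature value per frame (for example
    a joint angle) and all sequences are time-aligned to the same length.
    At least two healthy sequences are needed to estimate the spread.
    """
    frames = list(zip(*healthy_sequences))  # group values frame by frame
    means = [mean(frame) for frame in frames]
    stds = [stdev(frame) for frame in frames]
    return means, stds


def flag_deviations(test_sequence, means, stds, threshold=2.0, eps=1e-9):
    """Return one boolean per frame: True where the test participant lies
    more than `threshold` standard deviations from the healthy mean.

    The threshold of 2.0 is an illustrative choice, not a clinical value.
    """
    flags = []
    for value, m, s in zip(test_sequence, means, stds):
        z = (value - m) / max(s, eps)  # eps guards against zero spread
        flags.append(abs(z) > threshold)
    return flags
```

Calling `build_mobility_model` on a handful of healthy recordings and then passing a new recording to `flag_deviations` gives a per-frame list marking where in the motion the participant departs from the healthy range, which is the kind of temporal feedback the abstract describes.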