Visualizing Tweets with Word2Vec and t-SNE, in Python

I have been looking at methods for visualizing large datasets of high-dimensional data. There are many available (e.g. PCA, Kernel PCA and autoencoders, with plenty more a quick search away), and the skill lies in selecting the right method for the job.

Of these, one has become my go-to method: t-SNE, short for t-distributed Stochastic Neighbor Embedding, a dimensionality-reduction algorithm that is great for visualising high-dimensional data. The goal is to embed high-dimensional data in low dimensions in a way that respects similarities between data points: points that are nearby in the high-dimensional space should map to nearby points in the low-dimensional embedding. t-SNE is best at preserving these local neighbourhoods. Distances between well-separated clusters in the embedding are much less reliable, so they should not be over-interpreted.
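To make "respects similarities" concrete, here is a minimal NumPy sketch of the two sets of affinities t-SNE compares. The function names are hypothetical, and the sketch simplifies one thing: it uses a single fixed Gaussian width `sigma` for every point, whereas real t-SNE tunes a width per point to match a chosen perplexity. In the high-dimensional space, similarities come from a Gaussian kernel. In the 2-D embedding they come from a heavy-tailed Student-t kernel, and t-SNE moves the 2-D points to minimise the KL divergence between the two.

```python
import numpy as np

def pairwise_sq_dists(Z):
    """Squared Euclidean distances between all rows of Z."""
    sq = np.sum(Z ** 2, axis=1)
    D = sq[:, None] + sq[None, :] - 2 * Z @ Z.T
    return np.maximum(D, 0.0)  # clip tiny negatives caused by rounding

def joint_p(X, sigma=1.0):
    """High-dimensional affinities p_ij (simplified: one fixed sigma for all points)."""
    P = np.exp(-pairwise_sq_dists(X) / (2 * sigma ** 2))
    np.fill_diagonal(P, 0.0)                 # a point is not its own neighbour
    P = P / P.sum(axis=1, keepdims=True)     # conditional p_{j|i}, each row sums to 1
    return (P + P.T) / (2 * len(X))          # symmetrise into a joint distribution

def joint_q(Y):
    """Low-dimensional affinities q_ij from a Student-t kernel (one degree of freedom)."""
    Q = 1.0 / (1.0 + pairwise_sq_dists(Y))
    np.fill_diagonal(Q, 0.0)
    return Q / Q.sum()

def kl_divergence(P, Q, eps=1e-12):
    """The cost t-SNE minimises: KL(P || Q), summed over pairs with p_ij > 0."""
    mask = P > 0
    return float(np.sum(P[mask] * np.log(P[mask] / np.maximum(Q[mask], eps))))
```

The heavy tail of the Student-t kernel lets moderately dissimilar points sit far apart in 2-D. That is what pulls clusters apart in t-SNE plots.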

This post is a tutorial on how to extract data from Twitter, run t-SNE on it and visualize the output. Credit for inspiring this post goes to Andrej Karpathy, who did something similar in JavaScript.

To get started, you need to ensure you have Python 3 installed, along with the following packages:

- Tweepy: a library for accessing the Twitter API;

- re: Python's built-in regular-expression module (part of the standard library, so there is nothing to install);

- Gensim: a library for topic modelling and word embeddings, including Word2Vec;

- scikit-learn (sklearn): a library of standard machine-learning techniques, including PCA;

- Matplotlib: a library that produces publication-quality figures.
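Several calls used in this post changed between gensim 3.x and 4.x (for example, `model.wv.vocab` was removed in gensim 4.0), so it is worth checking which versions you have installed. Below is a small sketch using the standard library's `importlib.metadata` (Python 3.8+). The helper name `installed_versions` is hypothetical.

```python
from importlib import metadata

def installed_versions(distributions):
    """Map each pip distribution name to its installed version, or None if it is missing."""
    versions = {}
    for name in distributions:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions

if __name__ == "__main__":
    for name, version in installed_versions(
        ["tweepy", "gensim", "scikit-learn", "matplotlib", "numpy"]
    ).items():
        print(f"{name}: {version or 'not installed'}")
```

Note that these are pip distribution names, so scikit-learn is listed as `scikit-learn` rather than `sklearn`.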

With that done, let's get on with the fun!

Side note: Jupyter Notebooks are a great way to do quick (and dirty) coding, and the `%matplotlib notebook` line used below assumes you are running in one.

Extracting Twitter data using Tweepy

The first stage is to import the required libraries. Apart from `re`, which ships with Python, these can be installed via pip.

```python
# Import core libraries
import re                              # Regular expressions, used for tokenising
import tweepy                          # Obtain Tweets via the Twitter API
from gensim.models import Word2Vec     # gensim's Word2Vec implementation
from sklearn.decomposition import PCA  # PCA for a linear 2-D projection

# Plot helpers
import matplotlib
import matplotlib.pyplot as plt

# Make matplotlib figures interactive (zoom, pan) inside a Jupyter notebook;
# remove this line if you run the code as a plain .py script
%matplotlib notebook
```

To extract Tweets, you need a Twitter account and a Twitter Developer account. From the Twitter Developer pages for your app, collect the following details:

- Consumer Key (API Key)

- Consumer Secret (API Secret)

- Access Token

- Access Token Secret

Save them in a file called credentials.py, for example:

```python
# credentials.py: Twitter credentials

# Consumer (API) keys
CONSUMER_KEY = ''
CONSUMER_SECRET = ''

# Access tokens
ACCESS_TOKEN = ''
ACCESS_SECRET = ''
```

Why create this extra file, I hear you ask? The access token and secret keys are confidential and should stay hidden. Never hard-code them in the core of your code. It is best practice to keep credentials in a separate file (excluded from version control) or in environment variables.
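If you prefer environment variables to a credentials.py file, a minimal sketch might look like the one below. The variable names (`TWITTER_CONSUMER_KEY` and so on) and the `load_credentials` helper are hypothetical, so use whatever names suit your setup.

```python
import os

# Hypothetical environment-variable names; choose your own
CREDENTIAL_VARS = (
    "TWITTER_CONSUMER_KEY",
    "TWITTER_CONSUMER_SECRET",
    "TWITTER_ACCESS_TOKEN",
    "TWITTER_ACCESS_SECRET",
)

def load_credentials(env=None):
    """Return the four credentials as a tuple, failing loudly if any are missing or empty."""
    env = os.environ if env is None else env
    missing = [name for name in CREDENTIAL_VARS if not env.get(name)]
    if missing:
        raise RuntimeError("Missing credentials: " + ", ".join(missing))
    return tuple(env[name] for name in CREDENTIAL_VARS)
```

You would then write `CONSUMER_KEY, CONSUMER_SECRET, ACCESS_TOKEN, ACCESS_SECRET = load_credentials()` in place of importing from credentials.py.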

With the access tokens stored in credentials.py, we can import them into our script and define a function that uses them to authenticate requests to the Twitter API.

```python
# Import Twitter access tokens from credentials.py
from credentials import CONSUMER_KEY, CONSUMER_SECRET, ACCESS_TOKEN, ACCESS_SECRET

def twitter_setup():
    """Authenticate with the Twitter API and return an API object."""
    # Authentication and access using keys
    auth = tweepy.OAuthHandler(CONSUMER_KEY, CONSUMER_SECRET)
    auth.set_access_token(ACCESS_TOKEN, ACCESS_SECRET)

    # Obtain authenticated API
    api = tweepy.API(auth)
    return api
```

So far, we have set up the environment by importing the core libraries and defined a function that returns an authenticated API object. It is now time to extract some Tweets!

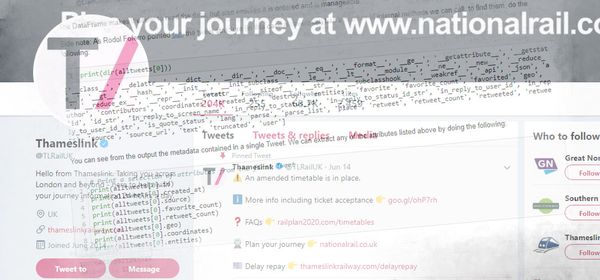

We do this by defining a function, get_user_tweets, which uses a Tweepy Cursor to page through a user's timeline. Each element in the returned list is a Tweepy Status object rather than plain text. The Tweet's text is in its `text` attribute.

Be aware that the Twitter API only returns roughly the 3,200 most recent Tweets from a user's timeline!

```python
# Create an extractor object (an authenticated API) by calling twitter_setup()
extractor = twitter_setup()

def get_user_tweets(api, username):
    """Return a list of Tweepy Status objects from `username`'s timeline."""
    tweets = []
    # Cursor handles pagination; items() iterates over every Tweet the API returns
    for status in tweepy.Cursor(api.user_timeline, screen_name=username).items():
        tweets.append(status)
    return tweets

alltweets = get_user_tweets(extractor, 'TLRailUK')
print("Number of tweets extracted: {}.\n".format(len(alltweets)))
```

Number of tweets extracted: 3221.
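The API is rate-limited and caps how far back you can go, so it can be worth caching the Tweets locally rather than re-fetching them every time you rerun the notebook. The minimal sketch below assumes that each Status object keeps the raw API response in its `_json` attribute, as it does in Tweepy 3.x and 4.x. Check this against your version. `save_tweets` and `load_tweet_texts` are hypothetical helpers.

```python
import json

def save_tweets(statuses, path):
    """Write the raw JSON of each Tweepy Status object to `path` as a JSON list."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump([status._json for status in statuses], f, ensure_ascii=False)

def load_tweet_texts(path):
    """Read a file written by save_tweets and return the text of each Tweet."""
    with open(path, encoding="utf-8") as f:
        return [tweet.get("text", "") for tweet in json.load(f)]
```

For example, call `save_tweets(alltweets, 'tlrailuk_tweets.json')` once (the filename is arbitrary). In later sessions, `load_tweet_texts('tlrailuk_tweets.json')` gives you the texts to tokenise without touching the API.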

Let's look at the first 5 (most recent) Tweets.

Tip: It is always wise to explore and view your data!

```python
# Print the 5 most recent Tweets for reference
print("5 recent tweets:\n")
for tweet in alltweets[:5]:
    print(tweet.text)
    print()
```

5 recent tweets:

@amcyoung An unknown item knocked the show gear off a train ^Neil

✅#TLUpdates - Services are no longer affected following an object on the track near St Albans.

⚠️#TLUpdates - Due to an object on the line near St Albans, southbound services may be subject to delay. Southboun… https://t.co/QVMhjYk3Lt

@Veryoldwreck @transportgovuk Hi there, you can contact our team directly here - 0345 026 4700 - ^Jack

@Veryoldwreck I recommend speaking directly with customer services regarding this cost - https://t.co/z5AZrPWNCm ^Kim

Tokenizing Tweets

Word2vec is a two-layer neural network designed to process text, in this case Tweets. Its input is a text corpus (here, the collection of tokenised Tweets) and its output is a set of feature vectors, one for each word in that corpus. In other words, Word2Vec converts text into a numerical form that a machine can work with, placing words that appear in similar contexts close together.
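Word2Vec learns these vectors from co-occurrence. The skip-gram variant trains the network to predict the words around each word. CBOW (gensim's default, `sg=0`) instead predicts a word from its neighbours. Both start from (centre, context) pairs taken within a window (gensim's `window` parameter defaults to 5). Below is a minimal sketch of how such pairs are generated from one tokenised Tweet. `skipgram_pairs` is a hypothetical helper, and real implementations add refinements such as frequent-word subsampling and randomly shrunk windows.

```python
def skipgram_pairs(tokens, window=2):
    """Return (centre, context) pairs for every token and each neighbour within `window` positions."""
    pairs = []
    for i, centre in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                pairs.append((centre, tokens[j]))
    return pairs
```

For example, `skipgram_pairs(['object', 'on', 'track'], window=1)` pairs 'on' with both 'object' and 'track', while each end word gets a single partner.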

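In this step, we tokenise the Tweets. Each Tweet's text is split into a list of tokens (words, @-mentions, hashtags, URLs, emoticons, numbers and punctuation) using regular expressions, and Word2Vec later learns a numerical representation for each token. We pass each Tweet's text (tw.text) to the tokeniser and append the resulting token list to a list.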

```python
# Emoticon pattern (re.VERBOSE lets us comment each part)
emoticons_str = r"""
    (?:
        [:=;]              # Eyes
        [oO\-]?            # Nose (optional)
        [D\)\]\(\]/\\OpP]  # Mouth
    )"""

# Regex patterns, tried in order: the first alternative that matches wins
regex_str = [
    emoticons_str,
    r'<[^>]+>',                        # HTML tags
    r'(?:@[\w_]+)',                    # @-mentions
    r"(?:\#+[\w_]+[\w\'_\-]*[\w_]+)",  # hash-tags
    r'http[s]?://(?:[a-z]|[0-9]|[$-_@.&+]|[!*\(\),]|(?:%[0-9a-f][0-9a-f]))+',  # URLs
    r'(?:(?:\d+,?)+(?:\.?\d+)?)',      # numbers
    r"(?:[a-z][a-z'\-_]+[a-z])",       # words with - and '
    r'(?:[\w_]+)',                     # other words
    r'(?:\S)',                         # anything else
]

# Compile one alternation of all patterns, plus an emoticon-only matcher
tokens_re = re.compile(r'(' + '|'.join(regex_str) + ')', re.VERBOSE | re.IGNORECASE)
emoticon_re = re.compile(r'^' + emoticons_str + '$', re.VERBOSE | re.IGNORECASE)

def tokenise(s):
    """Split a string into tokens using tokens_re."""
    return tokens_re.findall(s)

def preprocess(s, lowercase=False):
    """Tokenise s, optionally lowercasing every token that is not an emoticon."""
    tokens = tokenise(s)
    if lowercase:
        tokens = [token if emoticon_re.search(token) else token.lower() for token in tokens]
    return tokens

# Tokenise every Tweet's text
tokenised = []
for tw in alltweets:
    tokenised.append(preprocess(tw.text))

# Show the first 5 tokenised Tweets
print(tokenised[:5])
```

[['@amcyoung', 'An', 'unknown', 'item', 'knocked', 'the', 'show', 'gear', 'off', 'a', 'train', '^', 'Neil'], ['✅', '#TLUpdates', '-', 'Services', 'are', 'no', 'longer', 'affected', 'following', 'an', 'object', 'on', 'the', 'track', 'near', 'St', 'Albans', '.'], ['⚠', '️', '#TLUpdates', '-', 'Due', 'to', 'an', 'object', 'on', 'the', 'line', 'near', 'St', 'Albans', ',', 'southbound', 'services', 'may', 'be', 'subject', 'to', 'delay', '.', 'Southboun', '…', 'https://t.co/QVMhjYk3Lt'], ['@Veryoldwreck', '@transportgovuk', 'Hi', 'there', ',', 'you', 'can', 'contact', 'our', 'team', 'directly', 'here', '-', '0345', '026', '4700', '-', '^', 'Jack'], ['@Veryoldwreck', 'I', 'recommend', 'speaking', 'directly', 'with', 'customer', 'services', 'regarding', 'this', 'cost', '-', 'https://t.co/z5AZrPWNCm', '^', 'Kim']]

Often you can use a pre-trained model instead, such as Google's Word2Vec model trained on roughly 100 billion words of Google News text, which provides 300-dimensional vectors for about 3 million words and phrases.

But let's train our own and see how it looks. We train the model by passing in the tokenised Tweets and setting min_count=1, so that even words occurring only once are kept in the vocabulary (gensim's default, min_count=5, would discard them). We then print some descriptive output for reference.
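The effect of `min_count` can be sketched in a few lines: count every token across the corpus and drop those seen fewer than `min_count` times. This illustrates the filtering step only, using a hypothetical `build_vocab` helper. It is not gensim's implementation.

```python
from collections import Counter

def build_vocab(tokenised_tweets, min_count=1):
    """Count tokens across all Tweets and keep those occurring at least min_count times."""
    counts = Counter(token for tweet in tokenised_tweets for token in tweet)
    return {token: n for token, n in counts.items() if n >= min_count}
```

Tokens such as one-off URLs and usernames typically occur only once. With min_count=1 they stay in the model (and therefore in the plots later), at the cost of poorly trained vectors for those rare tokens.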

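```python
# Train a Word2Vec model; min_count=1 keeps words that occur only once
model = Word2Vec(tokenised, min_count=1)

# Summarise the trained model
print(model)

# Summarise the vocabulary.
# gensim >= 4.0 orders index_to_key by frequency; on gensim 3.x use list(model.wv.vocab),
# which gives first-seen order (the output below was produced with gensim 3.x).
words = list(model.wv.index_to_key)
print(words[0:5])

# Access the vector for one word, for reference
print(model.wv['Sorry'])
```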

```
Word2Vec(vocab=6616, size=100, alpha=0.025)
['@amcyoung', 'An', 'unknown', 'item', 'knocked']
[-5.71213722e-01 9.90668833e-02 -5.74942827e-02 -9.95725468e-02
4.58320946e-01 -2.51360744e-01 -3.71122926e-01 3.55062693e-01
-8.98340195e-02 -1.23867288e-03 -4.39108819e-01 -1.93447508e-02
-4.11524653e-01 3.51479262e-01 -1.45068735e-01 5.47581434e-01
1.76495716e-01 -3.56364131e-01 4.77366388e-01 7.64675513e-02
-7.96833187e-02 6.55818105e-01 -1.72715068e-01 2.86088198e-01
3.31279896e-02 -1.29176363e-01 4.07910794e-01 1.88273460e-01
-5.50167084e-01 -5.13329983e-01 -2.56392658e-01 -1.30526170e-01
3.45739573e-01 1.06925264e-01 -3.88811752e-02 6.80522859e-01
-1.06077276e-01 1.43520638e-01 -7.96001479e-02 -1.96152166e-01
3.28654493e-03 -4.38531458e-01 1.97747543e-01 -6.57617450e-01
-3.63079488e-01 -4.57680672e-01 -1.24889545e-01 -9.28130224e-02
8.51598442e-01 4.80584800e-02 -2.99913347e-01 -1.25507951e+00
2.94373959e-01 -7.62703419e-01 6.34522259e-01 4.75453436e-01
6.48569822e-01 -3.31943870e-01 -5.17366111e-01 2.46216848e-01
7.34380007e-01 -4.48545553e-02 -5.52372992e-01 -4.50296044e-01
2.70809025e-01 -6.19025826e-01 -1.01285450e-01 4.65955317e-01
3.62131521e-02 -4.84789729e-01 -2.20931605e-01 2.67450631e-01
-2.72101432e-01 5.72192192e-01 2.58358091e-01 6.89931631e-01
8.38520676e-02 6.72904968e-01 9.61698174e-01 -5.53342812e-02
4.10266370e-02 -1.97602939e-02 6.64769579e-03 6.53683245e-02
2.20210999e-01 -1.56317329e+00 1.27850986e+00 7.19225481e-02
-4.00944620e-01 4.58989471e-01 -1.56910866e-01 -8.36990118e-01
3.81893069e-01 -2.95753241e-01 -1.21713333e-01 3.00146073e-01
-5.30965388e-01 2.08905041e-01 -9.74384919e-02 -5.44581294e-01]
```
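Individual vectors are hard to read on their own, and comparing them is more informative. gensim provides `model.wv.most_similar('Sorry', topn=5)`, which ranks words by cosine similarity. The NumPy sketch below shows the idea. `most_similar` here is a hypothetical stand-alone function that takes a list of words and a matching matrix of vectors, for example `model.wv.index_to_key` and `model.wv.vectors` in gensim 4.

```python
import numpy as np

def most_similar(word, words, vectors, topn=5):
    """Rank other vocabulary entries by cosine similarity to `word`."""
    unit = vectors / np.linalg.norm(vectors, axis=1, keepdims=True)  # normalise each row
    idx = words.index(word)
    sims = unit @ unit[idx]            # cosine similarity to every word
    order = np.argsort(-sims)          # most similar first
    return [(words[i], float(sims[i])) for i in order if i != idx][:topn]
```

With a model trained on only a few thousand Tweets, expect the neighbours to be noisy.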

Visualizing with PCA

One common way to visualize the data is PCA. First, project the word vectors into a lower-dimensional space. Then plot the first two principal components.

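```python
# Fit a 2-D PCA model to the word vectors
# (gensim >= 4.0; on gensim 3.x use X = model[model.wv.vocab])
X = model.wv[model.wv.index_to_key]  # one 100-d row per vocabulary word
pca = PCA(n_components=2)
result = pca.fit_transform(X)
```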

`result` now holds the projected data: one row per word, giving its coordinates on the first two principal components.

```python
# Create a plot of the projection
fig, ax = plt.subplots()
ax.plot(result[:, 0], result[:, 1], 'o')
ax.set_title('Tweets')
plt.show()
```

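This does not tell us much, because it is hard to make out distinct groupings of words. PCA is a linear projection that keeps the directions of greatest overall variance, so local neighbourhoods in the 100-dimensional space can end up overlapping in two dimensions. That is where t-SNE comes into its own.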
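One way to quantify what the PCA view throws away is the fraction of variance the two plotted components explain. scikit-learn exposes this as `pca.explained_variance_ratio_`. The sketch below reproduces the computation with NumPy (centre, SVD, project) using a hypothetical `pca_2d` helper.

```python
import numpy as np

def pca_2d(X):
    """Project rows of X onto their first two principal components.

    Returns the 2-D projection and the fraction of total variance
    explained by each of the two components.
    """
    Xc = X - X.mean(axis=0)                           # PCA works on centred data
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    projection = Xc @ Vt[:2].T                        # coordinates on the top two components
    explained = (S ** 2) / np.sum(S ** 2)             # variance share of every component
    return projection, explained[:2]
```

If the two ratios add up to only a small fraction, the 2-D scatter shows only a thin slice of the structure in the 100-dimensional vectors.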

Visualizing with t-SNE

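We take X, the matrix of word vectors (one 100-dimensional row per vocabulary word), and pass it to t-SNE. The `tsne` module imported below is not a pip package. Judging by its log output, it is Laurens van der Maaten's reference tsne.py script, which needs to be downloaded into the working directory. This can be a time-consuming step, because that implementation works with distances between every pair of points, so the cost grows quadratically with the size of the vocabulary.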
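The "Computing P-values for point N of 6616" lines in the log come from t-SNE's first stage. For each point, the algorithm searches for the Gaussian width whose neighbour distribution has the requested perplexity (30 here), which is roughly the effective number of neighbours. The NumPy sketch below shows that per-point binary search. The helper names are hypothetical, and the sketch works with the precision `beta = 1/(2*sigma**2)` rather than sigma directly.

```python
import numpy as np

def conditional_probs(sq_dists, beta):
    """p_{j|i} for one point i, given its squared distances to the *other* points."""
    p = np.exp(-(sq_dists - sq_dists.min()) * beta)  # shifting by the min avoids underflow
    return p / p.sum()

def perplexity_of(p):
    """Perplexity 2**H(p), with the entropy H measured in bits."""
    p = p[p > 0]
    return 2.0 ** (-np.sum(p * np.log2(p)))

def find_beta(sq_dists, target_perplexity=30.0, tol=1e-5, max_iter=100):
    """Binary-search beta so that the point's neighbour distribution hits the target perplexity."""
    lo, hi, beta = 0.0, np.inf, 1.0
    for _ in range(max_iter):
        perp = perplexity_of(conditional_probs(sq_dists, beta))
        if abs(perp - target_perplexity) < tol:
            break
        if perp > target_perplexity:
            # Distribution too flat: narrow the Gaussian by increasing beta
            lo = beta
            beta = beta * 2 if np.isinf(hi) else (beta + hi) / 2
        else:
            # Distribution too peaked: widen the Gaussian by decreasing beta
            hi = beta
            beta = (beta + lo) / 2
    return beta
```

The target perplexity must be smaller than the number of other points, since a uniform distribution over n neighbours has perplexity n.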

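```python
# t-SNE, using the standalone tsne.py module
from tsne import tsne

# Arguments: data matrix, output dimensions (2),
# dimensions kept by tsne.py's initial PCA step (50), perplexity (30.0)
Y = tsne(X, 2, 50, 30.0)
```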

```
Preprocessing the data using PCA...
Computing pairwise distances...
Computing P-values for point 0 of 6616...
Computing P-values for point 500 of 6616...
Computing P-values for point 1000 of 6616...
Computing P-values for point 1500 of 6616...
Computing P-values for point 2000 of 6616...
Computing P-values for point 2500 of 6616...
Computing P-values for point 3000 of 6616...
Computing P-values for point 3500 of 6616...
Computing P-values for point 4000 of 6616...
Computing P-values for point 4500 of 6616...
Computing P-values for point 5000 of 6616...
Computing P-values for point 5500 of 6616...
Computing P-values for point 6000 of 6616...
Computing P-values for point 6500 of 6616...
Mean value of sigma: 0.019404
Iteration 10: error is 26.030440
Iteration 20: error is 24.907397
Iteration 30: error is 21.362837
Iteration 40: error is 19.597020
Iteration 50: error is 18.987148
Iteration 60: error is 18.698166
Iteration 70: error is 18.566039
Iteration 80: error is 18.481684
Iteration 90: error is 18.406705
Iteration 100: error is 18.349536
Iteration 970: error is 1.908643
Iteration 980: error is 1.907618
Iteration 990: error is 1.906620
Iteration 1000: error is 1.905643
```
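If you would rather not rely on the standalone tsne.py script, scikit-learn ships its own t-SNE (`sklearn.manifold.TSNE`), which uses the faster Barnes-Hut approximation by default. Below is a minimal sketch. `tsne_2d` is a hypothetical wrapper, and its output will not match the tsne.py run above point for point.

```python
import numpy as np
from sklearn.manifold import TSNE

def tsne_2d(X, perplexity=30.0, seed=0):
    """Embed the rows of X in 2-D with scikit-learn's t-SNE (perplexity must be < len(X))."""
    tsne = TSNE(n_components=2, perplexity=perplexity, init="pca", random_state=seed)
    return tsne.fit_transform(np.asarray(X, dtype=np.float32))
```

`Y = tsne_2d(X)` returns an array with one 2-D row per word, which works with the plotting code that follows.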

We can take the t-SNE output (Y) and visualize it as a plot.

```python
# Plot the t-SNE output
fig, ax = plt.subplots()
ax.plot(Y[:, 0], Y[:, 1], 'o')
ax.set_title('Tweets')
ax.set_yticklabels([])  # Hide tick labels
ax.set_xticklabels([])  # Hide tick labels
plt.show()
```

We can even add the words back onto the t-SNE output (Y) to explore the groupings. Here is an example.

```python
# Label each point with its word to explore specific groupings
fig, ax = plt.subplots()
ax.plot(Y[:, 0], Y[:, 1], 'o')
ax.set_title('Tweets')
ax.set_yticklabels([])  # Hide tick labels
ax.set_xticklabels([])  # Hide tick labels

# Rows of Y follow the same word order as X
# (gensim >= 4.0; on gensim 3.x use list(model.wv.vocab))
words = list(model.wv.index_to_key)
for i, word in enumerate(words):
    ax.annotate(word, xy=(Y[i, 0], Y[i, 1]))

plt.show()
```

You can then see which words sit in each group. In this example, some of the groupings correspond to usernames (@-mentions) and website links.
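Labelling all 6,616 words makes the plot hard to read. One option is to colour the points by token type instead, which makes the username and link groupings easy to spot. Below is a minimal sketch. `token_category` and `indices_by_category` are hypothetical helpers, and the rules simply check each token's prefix.

```python
import re

URL_RE = re.compile(r"^https?://", re.IGNORECASE)

def token_category(token):
    """Classify a token as 'mention', 'hashtag', 'url' or 'word' by its prefix."""
    if len(token) > 1 and token.startswith("@"):
        return "mention"
    if len(token) > 1 and token.startswith("#"):
        return "hashtag"
    if URL_RE.match(token):
        return "url"
    return "word"

def indices_by_category(words):
    """Group the row indices of `words` by token category."""
    groups = {}
    for i, word in enumerate(words):
        groups.setdefault(token_category(word), []).append(i)
    return groups
```

To use it, loop over `indices_by_category(words).items()` and call `ax.plot(Y[idx, 0], Y[idx, 1], 'o', label=category)` for each group, then `ax.legend()`.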

That's it for this post. I'll follow up in a later post on how to use unsupervised machine learning to identify and label each of the visualised groupings.